Allan Lichtman is Famous for Correctly Predicting the 2016 Election. The Problem? He Didn’t

You can read Professor Lichtman’s response here.

You can read our follow-up reporting debunking his later alleged timeline here.

Allan Lichtman is gearing up to make another prediction. Touted as the university professor who has accurately predicted every presidential election since 1984 based on his “13 Keys” system, the historian is set to release the 2024 edition of his signature book, Predicting the Next President: The Keys to the White House, on July 1. If history is any guide, he’ll also publish his official prediction for this year’s presidential election in the pages of Social Education sometime in the fall, in which he will go through his model step by step and foretell whether Joe Biden or Donald Trump will spend the next four years as commander-in-chief.

If you’re familiar with Lichtman, it’s probably because of his shock 2016 prediction, in which he insisted that Donald Trump would defeat a heavily favored Hillary Clinton. Trump’s eventual upset only added to Lichtman’s legend – his history-based model had succeeded where data-based models like FiveThirtyEight’s had seemingly failed, turning him into something of a minor celebrity.

Lichtman’s 13 Keys model has faced methodological criticism before, and we’ll be providing our own in this piece. But regardless of methodology, our chief concern with Lichtman is that, despite his claims of a perfect record, he has not been completely honest about what exactly he predicted in 2016.

After combing through Lichtman’s published work and interviews, we’ve come to two main conclusions: first, in 2016, Lichtman had predicted that Trump would win the popular vote; and second, after that result failed to materialize, he not only took credit for a correct prediction anyway, but was dishonest about the content of his own work, incorrectly and inconsistently claiming that the Keys had only been designed to predict the winner of the general election, not the popular vote, for every election held after 2000.

While this may be something of a niche concern, it matters a great deal to the two of us. The first reason is that, as election modelers ourselves, we believe that effective models can only be built, and lessons about the practice of modeling can only be learned, if modelers are completely honest with their audiences. The second is that we are also graduates of American University, the same school that employs Lichtman, and we care about our alma mater’s reputation. As such, we believe Lichtman’s model and claims deserve a thorough examination in the interest of both academic integrity and plain old honesty. What follows is our attempt to set the record straight regarding his model and predictions.

The 13 Keys

Let’s start at the beginning: Lichtman, a professor of history at American University, developed his predictive model with Vladimir Keilis-Borok, a Russian seismologist who developed a model for predicting earthquakes. Together, they wrote a paper outlining a model that suggested that presidential elections could be determined based on 12 binary factors. They based this approach on pattern recognition, suggesting that attempts to predict elections based on the “movement of voter blocs” and “the unique issues of an election” are less reliable than a broad overview of independent factors.

This ultimately became the “13 Keys,” a set of binary inquiries regarding the upcoming election. If five or fewer of the keys are “false,” then the incumbent party will win the presidency. If six or more are “false,” then the incumbent party will lose:

- Party mandate: After the midterm elections, the incumbent party holds more seats in the House of Representatives than after the previous midterm elections.

- No primary contest: There is no serious contest for the incumbent party nomination.

- Incumbent seeking reelection: The incumbent party candidate is the sitting president.

- No third party: There is no significant third party or independent campaign.

- Strong short-term economy: The economy is not in recession during the election campaign.

- Strong long-term economy: Real per capita economic growth during the term equals or exceeds average growth during the previous two terms.

- Major policy change: The incumbent administration effects major changes in national policy.

- No social unrest: There is no significant social unrest during the term.

- No scandal: The incumbent administration is not tainted by major scandal.

- No foreign/military failure: The incumbent administration does not have a major failure in foreign or military affairs.

- Major foreign/military success: The incumbent administration has a major success in foreign or military affairs.

- Charismatic incumbent: The incumbent party candidate is charismatic or a national hero.

- Uncharismatic challenger: The challenging party candidate is not charismatic or a national hero.
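The decision rule described above is simple enough to write down directly. What follows is only a minimal sketch of that rule as this article states it, not Lichtman's own implementation. The function name, the shortened key labels, and the example inputs are our own illustrative assumptions, and the hard part (deciding whether each key is true or false) is left entirely to the caller.

```python
# Shortened labels for the 13 keys listed above (the labels are ours).
KEY_NAMES = [
    "Party mandate",
    "No primary contest",
    "Incumbent seeking reelection",
    "No third party",
    "Strong short-term economy",
    "Strong long-term economy",
    "Major policy change",
    "No social unrest",
    "No scandal",
    "No foreign/military failure",
    "Major foreign/military success",
    "Charismatic incumbent",
    "Uncharismatic challenger",
]


def incumbent_party_wins(keys: dict[str, bool]) -> bool:
    """Return True if the rule predicts an incumbent-party win.

    Five or fewer false keys: the incumbent party wins.
    Six or more false keys: the incumbent party loses.
    """
    if set(keys) != set(KEY_NAMES):
        raise ValueError("expected a True/False judgment for each of the 13 keys")
    false_count = sum(1 for value in keys.values() if not value)
    return false_count <= 5


# Hypothetical judgments, chosen only to show the threshold.
example = {name: True for name in KEY_NAMES}
for name in KEY_NAMES[:6]:
    example[name] = False  # six false keys in total

print(incumbent_party_wins(example))  # six false keys, so the rule says "lose"
```

Note that the arithmetic is trivial. Everything that matters happens before the function is called, when someone decides which keys count as true.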

These have been unpacked to varying depths in Lichtman’s original and republished books on the system, The Thirteen Keys to the Presidency, The Keys to the White House: a Surefire Guide to Predicting the Next President, and – most recently – Predicting the Next President: The Keys to the White House.

Using these keys, Lichtman has supposedly “accurately predicted every election since 1984” and channeled this purported success into a buzzy media narrative sure to drum up clicks every four years when his next prediction is unveiled. But, the real story is far messier, primarily because he has papered over the fact that his model failed in the year he gets the most credit for: 2016.

Hasty Generalization and Probability

We’ll begin with a critique based on probability by submitting a simple inquiry: how hard would it be to actually predict ten presidential elections in a row?

We’ll handle this inquiry objectively, using some probabilities, and – cognizant of our own successful predictive record – be forthcoming about when data can easily be used to make a certain prediction look better than it may be, building from the most difficult to the least.

Mathematically, the probability of guessing a coin flip (or just randomly guessing which of the two major party candidates would win) right ten times in a row would be about 0.1%. If 1,000 people made predictions over ten consecutive elections just by flipping a coin, one of these people would be expected to have guessed them all right. This is the first flaw in placing great deference on any one person or system: given a limited sample size (and ten is a very limited sample size), there is a high probability that someone will have simply just been very lucky!

Consider us: we talk a big game about how we’ve successfully predicted the overall winner of the 2018, 2020, and 2022 Senate elections and the 2020 presidential election. Pretty good, for sure, but even if we just flipped a coin for each election, we’d still have a little over a 6% chance of getting them all right.
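For readers who want to check the arithmetic, here is a small sketch of the coin-flip figures quoted above. It assumes each call is an independent 50/50 guess, which is the simplification used in the text; the function name is our own.

```python
from fractions import Fraction


def chance_of_perfect_streak(n_calls: int) -> Fraction:
    """Probability of getting n independent 50/50 calls all correct."""
    return Fraction(1, 2) ** n_calls


ten_in_a_row = chance_of_perfect_streak(10)   # 1/1024
four_in_a_row = chance_of_perfect_streak(4)   # 1/16

print(f"Ten straight calls:  {float(ten_in_a_row):.2%}")
print(f"Four straight calls: {float(four_in_a_row):.2%}")

# Expected number of perfect records among 1,000 coin-flipping forecasters.
expected_lucky = 1000 * ten_in_a_row
print(f"Expected perfect records out of 1,000: {float(expected_lucky):.2f}")
```

Ten straight calls comes out to 1 in 1,024, and four straight calls to 1 in 16, which is where the "about 0.1%" and "a little over 6%" figures come from.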

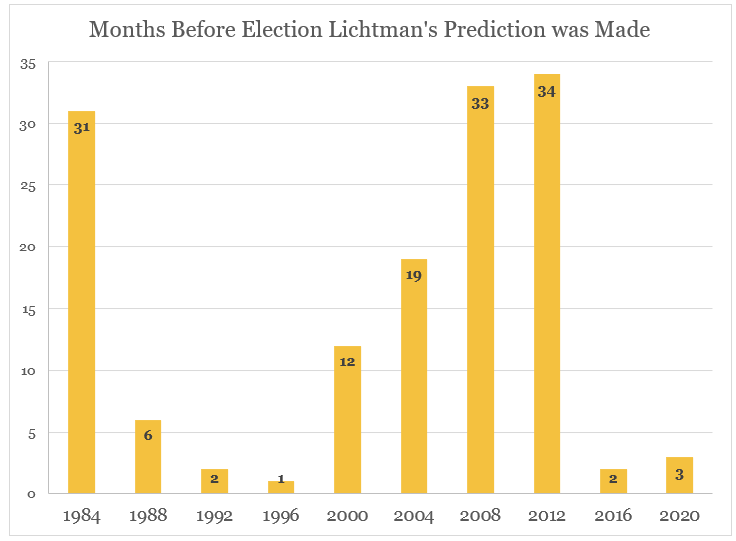

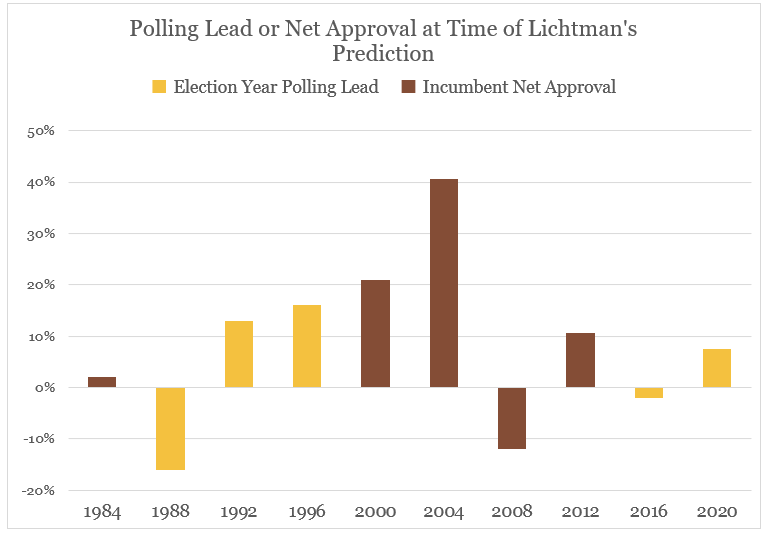

But some elections are easier to call than others, and therein lies the second probabilistic problem. Though Lichtman often releases his predictions months or years before the election, it’s rare that this is done with high levels of risk. Even conceding that making a prediction two years before votes are cast is impressive, note that both 1984 and 2008 were landslide elections where the winning party won by over 18 and seven points, respectively. Other predictions, like those for the elections of the 1990s, 2016, and 2020, were made very close to Election Day, and ample polling leads were in place when they were made.

Don’t get us wrong – some of these predictions remain impressive; Lichtman successfully “called” that George H.W. Bush would win at a time when Michael Dukakis had a 16% polling lead. But, even if we only take out predictions made within less than three months before the election wherein one candidate had a polling lead of more than 5% (sorry, but calling that Bill Clinton was going to win in 1996 at a time when he had a 16% lead in the polls is not exactly rocket science), this becomes less impressive. Predicting that a Democrat might win in 2008 when a two-term incumbent Republican was fairly unpopular? That’s better than 50/50 odds, at least. Predicting a popular incumbent wins reelection in 1984 or 2004? That’s at least 70-30 odds. You get the point: suddenly ten “correct” calls become only a handful of genuinely interesting ones.

A 2023 paper by Andrew Gelman, Jessica Hullman, Christopher Wlezien, and G. Elliott Morris hit this critique on the nose: “The trouble with such an approach, or any other producing binary predictions, is that landslides such as 1964, 1972, and 1984 are easy to predict, and so supply almost no information relevant to training a model. Tie elections such as 1960, 1968, and 2000 are so close that a model should get no more credit for predicting the winner than it would for predicting a coin flip.” Essentially, given a coin toss and insight as to whether the election might be a landslide, the odds of getting it right are 50%-100%, but no worse – that tends to weigh in your favor in a small sample size such as the ten elections Lichtman has predicted.
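To see how much easy calls inflate a streak, the same arithmetic can be repeated with unequal odds. The sketch below assumes the calls are independent and multiplies the per-election chances together. The specific odds in `mixed_difficulty` are hypothetical numbers we picked purely for illustration; they are not estimates for any real election.

```python
from math import prod


def chance_all_correct(per_call_odds: list[float]) -> float:
    """Probability that every call is right, assuming independent calls."""
    return prod(per_call_odds)


# Ten pure coin flips.
coin_flips = [0.5] * 10

# Hypothetical mix: four near-landslides, three leaning races, three toss-ups.
mixed_difficulty = [0.9] * 4 + [0.7] * 3 + [0.5] * 3

print(f"All coin flips:   {chance_all_correct(coin_flips):.2%}")
print(f"Mixed difficulty: {chance_all_correct(mixed_difficulty):.2%}")
```

Under these made-up odds, a perfect ten-for-ten record goes from roughly 0.1% to roughly 2.8%. The exact figure depends entirely on the odds assumed, but the direction does not: the more easy calls in the sample, the less a perfect record tells you.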

Again: consider our own models. We’ve been statistically blessed in that pretty much every election we’ve covered was a close one where both parties had a chance – but we can drill down a little to make the same point: if you look only at our 2022 Senate election predictions, sure you can give us some credit for “correctly” calling Arizona, but you wouldn’t give us the same amount of credit for “correctly” calling California or North Dakota… it’s just not as impressive.

If probabilities lend to the illusion of both credibility and capability, it is the gaming of these probabilities that makes for the easiest complaint against Lichtman’s approach: it is predominantly subjective.

Convenient Subjectivity

Referring back to the keys themselves, the only truly objective factors are the party mandate, incumbency, and the short-term and long-term economy. These four at least have a relatively clear answer that is easy to discern, but that leaves a lot of wiggle room for factors like whether the incumbent or challenging candidate is “charismatic or a national hero,” whether there’s a foreign policy failure, or what exactly constitutes a “major change” in national policy.

Though the subjectivity is less of a benchmarking concern for Lichtman’s predictions made well over a year away from the election, as the election approaches, they become slipperier. Back in 2011, Nate Silver (then of FiveThirtyEight) took Lichtman to task for this, frustrated by the fact that this system would allow Lichtman to deem a candidate as “charismatic” when they’re already ahead in the polls. Silver also criticized Lichtman’s claim that the model works for every historical election since 1860, as subjectively labeling a winning candidate as charismatic effects a superficial accuracy. This is a fair critique of any model that claims to work retroactively, but Lichtman isn’t famous for accurately predicting every election since 1860… only the last ten, so we won’t pile on. But suffice it to say Lichtman’s claims of historical accuracy prior to 1984 are without any merit. “No other political theory or electoral model published to date can account, even retrospectively, for the outcomes of presidential elections since 1960… The Keys to the White House account for or predict the outcomes of all thirty-four elections since 1860,” reads the introduction to his 1996 book. The use of this argument to bolster a record is unjustifiable – with the benefit of hindsight, anyone could write a “model” that would correctly “predict” the outcome of all elections since 1788 based solely on this XKCD comic.

We will, however, express concerns about the subjectivity of the model at large. For answers, we turned to Lichtman’s books. He addresses this criticism promptly, asserting there are three things which distinguish his keys from the “ad hoc judgments offered by conventional political commentators”:

- First, “decisions about the keys must be made consistently across elections.” Even if we boldly assume that elections are comparable as an apples to apples comparison (they’re not), that’d be all well and good, but if only he followed his own advice here… which we explore in a lot more depth in the section below questioning his 2016 “prediction.”

- Second, “once a decision is made for all thirteen keys, the system yields a definite prediction that serves to test the judgments made.” Allow us to paraphrase: “even if I predicted the election wrong… it means I just applied the keys wrong because the keys will get it right, and since I invented the keys, I was right anyway.”

- Third, “the keys have a successful track record.” This is a completely circular argument.

He’s spoken on the issue too, arguing subjectivity isn’t a concern because the keys are simply judgments – going so far as to say his system is “purely objective” and then blurring the lines between what subjectivity and objectivity are by arguing, fairly, that even the most objective measurements (like GDP growth) contain subjective determinations (like what is counted as economic activity). His broader point is probably right: purely “objective” models don’t work very well without accounting for “subjective” variables, and recent political modeling has been too reliant on “objective” factors, necessitating a more personal approach. Our own approach has been consistent with this philosophy, though we’d describe them as “quantitative” and “qualitative” factors, respectively. The aim is always to be objective.

But Lichtman’s application of the keys seems to fluctuate subjectively according to what fits the convenient narrative, or what the “successful track record” requires. Barack Obama received the charisma key in 2008 but not in 2012, while Bill Clinton, who was noted for his oratorical skills, never did because of the “dark cloud” cast by his history of alleged womanizing. Why are Obama’s stimulus and health care act considered a “major change in national policy” while Bill Clinton’s crime bill or welfare reform, or George W. Bush’s creation of a new government department and passage of No Child Left Behind (not to mention his own stimulus at the start of the Great Recession) are not? Over the course of the 2016 election, Lichtman said that the third party factor – which requires a third party to get 5% of the vote – was true but that the primary contest was indeterminate. Then, when the results showed that the third party did not get 5% of the vote, he was able to retroactively apply them to make the model work, despite his claims that “I’ve not retroactively changed any keys.”

In fact, even the seemingly objective keys fall apart under scrutiny. Lichtman’s short-term economy key is carefully caveated with a disclaimer: an economic downturn doesn’t need to meet the “narrow, technical definition of a recession,” instead, it depends on whether there is “the widespread perception of an economy mired in recession during the election campaign.” Suddenly that’s a pretty subjective call too, and one that looms large given recent opinion polling concerning the economy in 2024.

An Imperfect Record

Even if one were to agree with the way that Lichtman has determined the keys in the past, recent discussion of his prediction record conceals a glaring failure: the presidential election he is most famous for “correctly” predicting, in 2016, is also the only election call he missed.

To see why, we first have to turn to 2000. In 2012, U.S. News & World Report called him the “Never-Wrong Pundit.” But, in 2000, the first genuinely tight election (in that no candidate ended up winning by a margin greater than 5%) since Lichtman began making predictions, he predicted that Al Gore would win.

Of course, George W. Bush won the presidency instead. But Lichtman had an explanation for this – the Keys “focus on national concerns such as economic performance, policy initiatives, social unrest, presidential scandal, and successes and failures in foreign affairs. Thus, they predict only the national popular vote and not the vote within individual states.” In fact, Lichtman said this has always been the case, because in all “three elections since 1860, where the popular vote diverged from the Electoral College… the Keys accurately predicted the popular vote winner,” he wrote in the first post-2000 edition of his book. But his actual paper predicting Gore’s victory never explicitly says this and claims only that “Democrats… will win three consecutive terms in the White House” – a meaningfully distinct claim from “Democrats will win the popular vote three times in a row!” But, the popular vote explanation in the wake of 2000 is more than just a convenient save by Lichtman here. It’s backed up by both the 1990 and 1996 editions of his book, in which he does clearly state that “[b]ecause the Keys to the White House diagnose the national political environment, they correlate with the popular balloting, not with the votes of individual states in the electoral college.” But at least from here we know that the rules have been set: his model survives as having made the “correct” call in 2000, because it only predicts the popular vote.

In the years since, Lichtman has also claimed that the 2000 election was “stolen,” claiming that Gore would have won Florida (and therefore the presidency) by “tens of thousands of votes” if it weren’t for the disproportionately high number of ballots rejected in the state’s majority Black precincts. On first blush, this sounds like an evasion, but to be fair to Lichtman, he presented a report on these alleged disparities to the U.S. Commission on Civil Rights in 2001, so whatever feelings one may have about his conclusions regarding 2000, they at least appear to be genuine.

However, there is still something to be said about how much Lichtman still leans on language like “I’ve forecasted all presidential elections correctly,” “This is a system that’s been right since 1984,” and “I have been predicting elections for 30 years correctly” even in the wake of this, as it comes with a sizable asterisk given he did fail to predict the winner of the election, even if he did predict the winner of the popular vote. But at least his emphasis on predicting the popular vote had precedent and could be backed up by his past work. The same cannot be said for what happened 16 years later.

In the 2016 election, when nearly every outlet seemed to bank on a Hillary Clinton victory, Lichtman seemingly stood alone in predicting a Trump win. When Trump ended up winning the presidency, Lichtman’s fame shot up, and it’s fair to say he enjoys much of his recent notoriety from the Trump call.

But, Trump didn’t win the popular vote… Clinton won it by 2.1%. By Lichtman’s own, consistent rule, he was wrong. Yet, he continues to enjoy recognition for his predictive accuracy – in 2016, Maureen Dowd called him the “only one major political historian” to predict Trump’s victory, and he continues to be invoked as the man who “correctly predicted every presidential race winner since 1984.” Both of these things cannot be true – he cannot claim to have correctly predicted both the 2000 election and the 2016 election – but a thorough effort to retcon and paper over the mistake of his 2016 call has allowed him to keep his ostensible authority.

On November 9, 2016, just after the election, Robert Siegel of NPR brought this inconsistency up in an interview with Lichtman:

SIEGEL: Now, a question about that winning streak of yours. If I understand it, you claim predicting Al Gore’s victory in 2000 as a win since he won the popular vote. But Hillary Clinton appears to also be winning the popular vote, and you don’t claim a loss for predicting Donald Trump.

LICHTMAN: Well, because I pointed out in this election while the keys certainly favor the defeat of the party holding the White House, that you also have the Donald Trump factor. First time I’ve ever qualified a prediction an out-of-the-box, history-smashing, unqualified candidate. So you had two forces colliding, which produced a win in the Electoral College, but essentially a tie, as far as I could tell, in the popular vote. We don’t know how it’s going to come out ultimately. So, in fact, the keys came as close as you can to a contradictory election.

This non-answer suggests “we don’t know how it’s going to come out ultimately,” as if Trump may still win the popular vote once more votes are counted, proving Lichtman right. He describes the 2016 popular vote as a “tie,” but George W. Bush in 2004 received around the same popular vote margin as Hillary Clinton in 2016, which Lichtman did not call a “tie,” nor in his books does he make any qualifications about slimmer popular vote victories in 1960, 1968, or 1976; in the 1888 election, where the popular vote winner did lose the electoral college, he wrote that “Cleveland won – as the keys would have predicted – a plurality of the popular vote.” Lichtman would later say, in 2020, that 2016 was a “whopping loss” in the popular vote for Trump.

And yet, there’s been little scrutiny of his 2016 call since. Part of the reason may be that, as he noted to NPR, Lichtman “qualified” his 2016 prediction. In the months leading up to the 2016 election, he repeatedly asserted that Trump was a “history-smasher” whose unusual characteristics meant that he could “change the patterns of history that have prevailed since the election of Abraham Lincoln in 1860.” Lichtman consistently said Trump was so “precedent-breaking” that he may “break the pattern of history and snatch defeat from the jaws of victory, despite my system.”

This “right if I’m wrong, wrong if I’m right” outlook is the first serious issue with Lichtman’s 2016 prediction. We don’t need to explain at length why someone who argues Trump will win… unless he doesn’t, in which case it’s because he’s special… has actually made no prediction!

The second issue is a bit more particular and in line with the critique that his keys are applied subjectively. In 2016, Lichtman said that the third party key had turned against the Democrats, but even in his final prediction said that the “serious contest for the incumbent party nomination” key was undetermined. As a rule, Lichtman has said that an uncontested nomination is “one in which the nominee wins at least two-thirds of the total delegate vote on the first ballot at the nominating convention.” Despite the fact that Bernie Sanders did receive about 40% of the delegate votes on the first ballot, he wrote that this remained undetermined because Sanders “endorsed” Clinton prior to the convention – or that a Trump victory would unite Democrats despite the primary contest. It didn’t end up mattering because the third party candidate got less than 5% of the vote, and, therefore – to make the keys work – the Sanders challenge did count… though, of course, this only became obvious after the election. Still, what was all this noncommittal equivocating about? Are the rules equitably applied over history or not?

But it is the third issue that is the most damning. Despite recent insistence that he had stopped predicting popular vote winners after 2000, Lichtman directly said the Keys were designed to do just that in the following sources, leading up to Election Day, 2016:

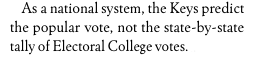

- His paper predicting each election between 2004 and 2012, which he publishes every four years in the journal Social Education. In 2012, for example, he wrote: “As a national system, the Keys predict the popular vote, not the state-by-state tally of Electoral College votes.”

- In Lichtman’s rebuttal to Nate Silver, published in 2011: “the keys are not designed to estimate percentages, but only popular vote winners and losers.”

- In the edition of his book published in May 2016 for that year’s election, where he writes: “they predict only the national popular vote and not the vote within individual states.”

- And, most clearly, in Lichtman’s published paper from October of 2016 predicting the outcome of the election, where he writes that “the Keys predict the popular vote, not the state-by-state tally of Electoral College votes.”

Additionally, in the original publication of Lichtman’s prediction by American University, and indeed up through at least July 2017, the article featured on the university’s website read that “Lichtman’s ‘13 Keys’ system predicts the outcome of the popular vote.” At some point afterwards, this text was changed to read “Lichtman’s ‘13 Keys’ system predicts the winner.” At some point between August 2020 and November 2020, but after the text change, an “Editor’s Note” was added, stating: “This story has been updated with a correction. It has been corrected to read that Prof. Lichtman’s 13 Keys system predicts the winner of the presidential race, not the outcome of the popular vote.” This is the version that persists on the American University site, even though it contradicts the books and papers Lichtman published between 2004 and 2016. And if we can’t trust that his books and papers reflect how the model is actually supposed to work, then what’s the point of publishing them?

Rewriting His Own History

Despite explicitly stating at least twice in 2016 that the Keys predict the popular vote, in October of 2020, Lichtman wrote in a post for the Harvard Data Science Review:

In 2016, I made the first modification of the Keys system since its inception in 1981. I did not change any of the keys themselves or the decision-rule that any six or more negative keys predicts the defeat of the party holding the White House. However, I have switched from predicting the popular vote winner to the Electoral College winner because of a major divergence in recent years between the two vote tallies.

This statement seems to imply that Lichtman made the modification before the 2016 election, even though every piece of evidence prior to that election clearly indicates it was only predicting the popular vote. Almost identical language appears in the 2020 edition (which is the most recent edition) of his book, Predicting the Next President: The Keys to the White House, and Lichtman claims his September 23, 2016 interview with The Washington Post as evidence (the piece only mentions Lichtman’s popular vote-predicting record and is full of extensive caveating, yet it still never clarifies that Lichtman ever “switched” prior to the election). It also doesn’t explain why an October 27, 2016 video with The Washington Post clarifies that his predictions pertain to the popular vote.

Curiously, Lichtman’s 2020 edition completely omits a chapter on the 2016 election, despite a chapter explaining the 2008, 2012, and 2020 elections (and chapters running through the keys for every other election starting with 1860). When asked, Lichtman said this was a “publisher glitch.” Whether glitch, typo, or oversight, the 2020 edition of his book – just like the 2016 edition – also notes that “Because the Keys to the White House diagnose the national political environment, they correlate with the popular balloting, not with the votes of individual states,” explaining that the Keys predict the “popular vote.”

In the times where his 2016 prediction has come up or he’s been directly confronted about it, like on his weekly live chats, he’s danced around the question by saying “it’s irrelevant,” or pivoting to suggesting how “since 2000, the popular vote hasn’t meant anything… in recent years I’ve just predicted the winners.” “I don’t bother with the popular vote,” and “predicting the popular vote is useless,” he’s added in other chats. In one chat, he answered, “Ever since 2000 you know I’ve just been picking the winner.” In another live chat hosted on June 13 of this year, Lichtman and his co-host, his son Sam Lichtman, went as far as to claim that in his September 23, 2016 interview with the Washington Post, he said he was “just picking the winner.” While it’s true that Lichtman never explicitly states in that video that he’s picking the winner of the popular vote, he also never qualifies that he’s no longer picking the popular vote winner. More to the point, he goes step by step through the Keys to illustrate why they favor a Trump victory. Considering that in his October 2016 paper for Social Education, he explicitly states that the Keys are meant to “predict the popular vote, not the state-by-state tally of Electoral College votes,” any reasonable person would assume that any prediction he made using the Keys would therefore be predicting the popular vote (The Postrider reached out to the producer of the Washington Post video for comment, but received no reply).

You’ll also note that in the 2020 book and Harvard Data Science Review article, Lichtman says he made the “first modification” of the Keys in 2016, not something done “since 2000,” but he still does not mention that his system was ever modified when asked in the hours after the 2016 election in the NPR interview. Even if one were to accept that these references to the popular vote were the result of people other than Lichtman misunderstanding his model, it’s worth once again pointing out that he was still explicitly making the claim that his model predicts only the popular vote as late as October of 2016, in his paper titled “The Keys to the White House,” where he wrote that “the Keys predict the popular vote, not the state-by-state tally of Electoral College votes.”

Identical, or similar, language exists in his 2004, 2008, and 2012 predictions. His 2008 prediction makes it abundantly clear that it only predicts the popular vote (“Win indicates the popular vote outcome for the party in power”), as does the 2012 prediction, so the claims that he switched to merely picking Electoral College winners “since 2000” fall apart.

From Lichtman’s 2012 prediction

We attempted to reach Lichtman ourselves last week and had initially scheduled an interview with him for 1 PM on Monday, June 17th. Approximately an hour before the interview, Lichtman canceled, writing: “For explanations and updates on the Keys please see my live show every Thursday at 9pm DC time, @allanlichtmanyoutube. Also, the latest edition of the book will be out shortly.” When asked if he would be open to rescheduling the interview for later in the week or answering questions via email, he replied that he would be “happy via email to answer any questions about my 2024 analysis and your 2024 model.” At 2:24 PM, we replied to that email with our questions regarding his 2016 prediction, citing the contradictions identified in this article. At 2:25 PM, he replied, “All the answers about 2016 are in my book.” At 2:28 PM, he followed up on that message, saying, “The latest will be out shortly and can be preordered on Amazon.”

On Monday morning, we also reached out to the author of the edited article on American University’s website asking if she would be able to tell us why the editor’s note about the popular vote was added to the article. She replied that she made the correction, writing, “In the article, Prof. Lichtman does not discuss popular vote, and is quoted as saying, ‘The Keys point to a Donald Trump victory, and in general, point to a generic Republican victory.’” This is true, but it doesn’t change the fact that Lichtman claimed the Keys were designed to predict the popular vote in his October 2016 Social Education article, nor does it explain his denial that he had explicitly predicted the popular vote winner in 2004, 2008, and 2012 as well.

Betraying His Own Model

In the years since 2016, Lichtman has also explained that a “radical change” in the demography of the country means that the popular vote has diverged so substantially from the electoral college that the system now just predicts who wins the election, rather than the popular vote. “In any close election,” wrote Lichtman in 2020, “Democrats will win the popular vote, but not necessarily the Electoral College. That’s why it is no longer useful to predict the popular vote rather than just the winner of the presidency.” This is an update that allows him to, conveniently, be right in both 2000 and in 2016, if just retrospectively (he did not issue this explanation until after Trump lost the popular vote). That’s a pretty convenient caveat, especially given his “no, no! I wasn’t wrong, I correctly predicted that Al Gore would win the popular vote,” clarification from 2000, and it doesn’t quite pass the smell test.

When asked in a September 2023 live chat how the Keys would change if elections were decided by the popular vote instead, he responded, “it’s an impossible question because it’s not going to happen.” For a model that allegedly predicts and conforms to 160 years’ worth of presidential elections based on the popular vote, that’s quite an about-face. Only after 2016 is the popular vote suddenly and completely irrelevant, even though Lichtman stressed the model’s historical strength in his 2004 prediction:

As a nationally-based system the Keys cannot diagnose the results in individual states and thus are more attuned to the popular vote than the Electoral College results. The 2000 election, however, was the first time since 1888 that the popular vote verdict diverged from the Electoral College results. And the Keys still got the popular vote right in 2000, just as they did in 1888 when Democrat Grover Cleveland won the national tally but lost in the Electoral College to Republican Benjamin Harrison and in 1876 when Democrat Samuel Tilden won the popular vote but lost the Electoral College vote to Republican Rutherford B. Hayes.

It also makes his entire model incredibly dubious – you don’t get to tout a “very robust model” based on the “very powerful” “force of history” which “takes into account enormous changes in our politics” (all things Lichtman has insisted upon) and then claim that we are now, all of a sudden, in a completely new environment where the rules no longer apply. Especially when Lichtman has said, even in the wake of the 2020 election, “Would the pattern of history hold? And of course it did hold.” So, either your system is infallible because it relies on the powerful force of history, or it’s not; either your Keys are a “national system” predicting the winner of the popular vote (like he said they did in October 2016), or they predict the winner of the Electoral College; either you were wrong in 2000, or you were wrong in 2016. You don’t get to have it both ways, just because it helps you preserve a perfect prediction record.

Some outlets, such as The Guardian and USA Today, have moved to qualify his record, but they do so by crediting his 2016 prediction (the one that is the most objectively wrong) and counting his 2000 prediction (which, based on his own, clearly communicated rules, he actually got right) as the miss, writing that he’s been right “nine of the past 10 (and even the one that got away, in 2000, he insists was stolen from Al Gore)” elections, while calling his Trump “prediction” a “striking prophecy” or referring to him as a “Nostradamus.”

But no major outlet has seriously investigated the ways that Lichtman has contradicted himself in the wake of his half-correct 2016 prediction. This has resulted in a nonsensical double bind wherein Lichtman continues to receive credit for designing a model with a perfect record, even though the caveats and adjustments that would have made his unbeaten streak possible do not appear to have existed until after the 2016 election. That means, to this day, outlets still champion him as having an “ace record” on predicting elections, even though a few hours of research would reveal that he appears to have retroactively changed what it is his model is meant to predict in the first place as a means of preserving his dubious 10 for 10 streak.

In other words, Lichtman, the former chair of American University’s Department of History, is literally trying to rewrite history in favor of his own record, and most of the press he’s received since 2016 is, wittingly or not, helping him do so.

The Cost of Chasing a Perfect Record

Predicting elections well is hard, detail-oriented work. It takes careful thought and an ability to improve and adjust over time. In the United States, a diverse country with many jurisdictions holding individual elections simultaneously, it also takes a bottom-up approach that does account for the differences in the various states and districts which elect the president. But above all, it takes the same hallmark qualities expected of quality academia – the ability to learn, grow, and self-correct. By failing to do so, and instead digging in on insisting he’s always been right, Allan Lichtman has let down both political scientists and academia as a whole.

There are two ironies of Lichtman’s apparent retconning of his prediction record. The first is that, whether or not his model actually backs up his prediction, he does deserve at least some credit for predicting a Trump victory, and doing so in such a public fashion. As late as November 5, 2016, HuffPost’s Ryan Grim accused Nate Silver, then of FiveThirtyEight, of “making a mockery of the very forecasting industry that he popularized” for designing a model that showed Trump had a 35% chance of winning the election. At least until the results came in, Lichtman stuck to his guns and trusted his own model, even though it ran contrary to the polls and predicted an outcome many thought was unthinkable. He appears to have fudged his prediction in the years since to make himself seem more right than he actually was, but it’s at least true that he saw something that most in the pundit class did not. He may not have been completely correct, but he was more correct than most observers (including the authors of this piece) who were confident in a Clinton victory.

The second irony is that he also recognized that Trump’s election had the potential to change both American politics and his own prediction model.

“I do think this election has the potential to shatter the normal boundaries of history and reset everything, including, perhaps, reset the Keys,” Lichtman told The Washington Post on October 27, 2016. “Look, I’m not a psychic. I don’t look in a crystal ball. The Keys are based on history, and they’re based on a lot of changes in history, they’re very robust. But there can come a time when change is so cataclysmic that it changes the fundamentals of how we do our politics. And this election has the potential.”

But, for whatever reason, when the outcome Lichtman said the Keys predicted failed to materialize, rather than admit that a model developed before the end of the Cold War and informed by data from before the abolition of slavery may be in need of some adjustment, he decided to ignore his past statements that he was predicting the popular vote and take credit for being half right anyway.

Beyond Lichtman, this whole affair reflects poorly on American University, which receives positive press from Lichtman’s dubious prediction record, but fails to provide serious scrutiny or accountability for its own professor. Universities are critical institutions, shaping discourse and ultimately society, and it is these responsibilities that make it incumbent on them to be accountable and adaptable as new data and information emerge, skeptical of any singular soothsayer and primed to make corrections when one is merited. American’s apparent complicity in the retconning of Lichtman’s record is negligent at best and cynical at worst, and doubly irresponsible in an era in which conservative politicians have redoubled their efforts to undercut the public’s trust in higher education. The same could be said for the journalistic outlets that have credulously trumpeted Lichtman’s “perfect” record since 2016, especially The New York Times, which allowed Lichtman to make the unsubstantiated claim that he had moved on to only picking Electoral College winners since 2000 in a video featuring his 2020 prediction (The Postrider reached out to the producer of this video for comment, but received no reply).

Even if Lichtman had correctly predicted every single election, which he hasn’t, his unwillingness to change or adjust should give any acolyte of his some pause. The housing market always grew… until it didn’t. Joe DiMaggio kept recording hits… until he didn’t. And Democrats were sure to win Wisconsin… until they didn’t. Clinging to past successes without the willingness to adapt and improve does a disservice to serious political analysis and historical assessment. The academic world, and the institutions that support it, must prioritize rigorous scrutiny and a commitment to continuous learning over the allure of a perfect record or the comfort of unchanging methods. It is not the infallibility of predictions that advances any discipline, but an enduring capacity to question, to evolve, and to recognize that every system – no matter how successful it appears – is vulnerable to failure if it fails to self-reflect and change. A professor of history should know better.
